Neural Genetic Hybrids

This software provides libraries for use in Python programs to build hybrids of neural networks and genetic algorithms and/or genetic programming. While both techniques are useful in their own rights, combining the two enables greater flexibility to solve difficult problems. This version uses Grammatical Evolution for the genetic algorithm/programming portion.

To ensure a reasonable learning curve, there are a number of default values and design choices that enable an initial usage without knowing all of the details. As more experience is gained, many settings can be altered to better match the characteristics of the problem that you are trying to solve.

While neural networks can handle many circumstances, a number of search spaces are beyond reach of the backpropagation technique used in most neural networks. This is chiefly due to being trapped in local minima. Genetic algorithms are introduced into the mix, because more spaces can be effectively searched. By using genetic algorithms as a wrap around process to neural networks, the fitness selection function is the ultimate arbiter of a desirable solution. Multi-objective problems become more tractable as well.

The process cycle for a hybrid is:

- Create a grammatical evolutionary framework.

- Specify a BNF that could result in a neural network that could solve your problem

- Determine what fitness selection criteria to include.

- Determine on what basis replacements will take place.

- Determine an end game criteria, whether fitness value, number of generations or both.

- Run

Genetic Algorithms

Genetic algorithms are derived from biological themes associated with DNA. A series of genotypes are generated, which are random strings of ones and zeros. The group of them constitute a population. To get from random strings to solving a problem, there needs to be a mechanism, similar to transcription, which maps the unique combination of ones and zeros to a process which solves the problem. This is called a fitness function.

The fitness function takes the ones and zeros, and implements a solution. By calculating a fitness function for each genotype, the quality of the solution that a genotype encodes is determined. Some solutions are going to be better than others. The fitness selection process selects some of the best genotypes and combines features from pairs of those, some are mutated slightly by changing a 1 to 0 or vice versa, and the worst are replaced. This cycle is referred to as a generation. This cycle is then repeated. Over time, the solutions that are closer to solving the problem become more common. Eventually, the problem is sufficiently solved and the process completes. The particular flavor of genetic algorithms/programming used is a technique called grammatical evolution.

Grammatical evolution uses a grammar in a Backus-Naur form, which defines variables and statements, using the genotype as selector of the form. The met hod is particularly useful because it can produce syntactically correct programs, and enables a great deal of control over output from modest changes to fixed sets of statements to a wildly unconstrained profusion of code.

Implementation

This implementation of grammatical evolution in Python:

- Supports wrapping of the genotype while mapping

- Can extend the genotype length while wrapping, allowing more nuanced crossovers and mutations

- Supports runtime mapping of variables. The program is not simply predefined and run, but can continue to pull random but reproducible variables and functions during runtime.

- There are a number of fitness selection options, such as Elite, Tournaments, Fitness Proportionate, and Linear Ranking.

- Mutations can be applied once per genotype, or apply a low mutation probability per genotype bit.

- Crossovers have a choice of one or two children.

Grammatical Evolution Tutorial

Neural Networks

Neural networks can be used to solve a variety of problems that are difficult to solve in other fashions. The approach uses supervised learning. The basic motif is to gather a set of inputs and a set of target outputs and the network builds a bridge between the two. Such networks are chiefly used to solve non-linear problems.

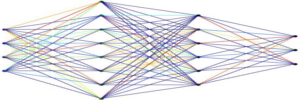

Neural networks are roughly based on biological metaphors for nerves. The neural network accepts inputs and applies a weighting system to calculate a series of intermediate values stored in what are termed nodes or neurons. To extend the biological metaphor, the value may be sufficient to cause the neuron to fire or activate, resulting in an activation value from the neuron that is greater than the sum of the weights received by the neuron. Because of this activation feature a non-linear result is possible.

By comparing the outputs to the target values, an error gradient is created. Using a technique call back propagation, the weights of the nodes leading up to the output are proportionally adjusted according to the error curve slope. By successively cycling inputs and targets, and adjustments to the weights, the network weights are slowly shifted so that outputs of the network more closely mirror the desired target values.

Implementation

This implementation supports various kinds of network structures and neuron types:

Network structures:

- Multiple hidden layers

- Sparse networks

- Skip layer connections

- Recurrent networks

Neuron types:

- Sigmoidal, tanh, and linear activation functions

- Activation functions can be set by node or layer

- Easily subclassed custom activation functions

Processing:

- Test input can be randomly processed for each epoch